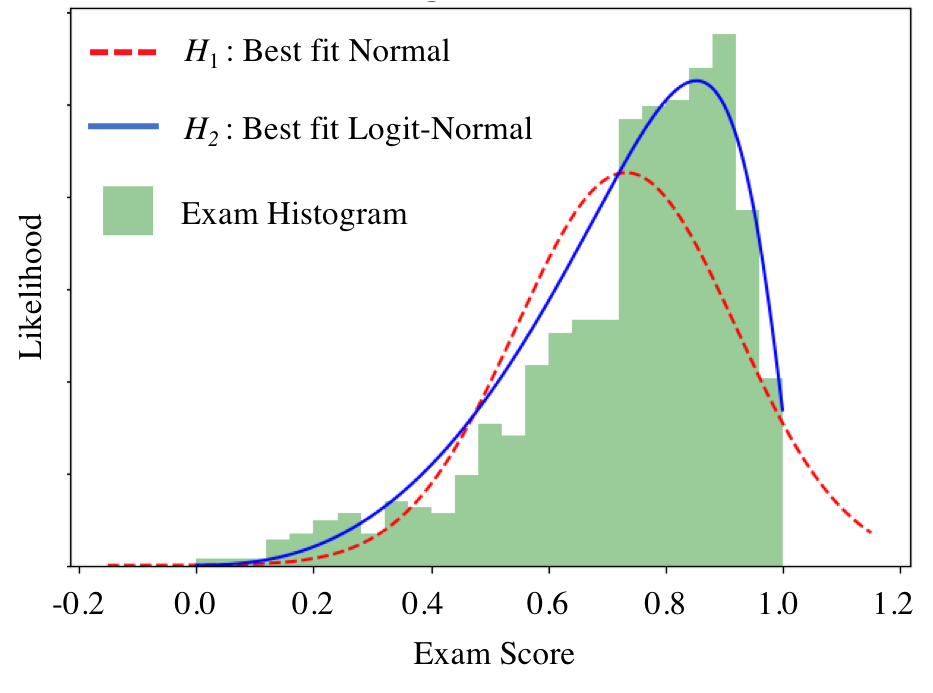

Grades are Not Normal

Sometimes you just feel like squashing normals:

The logit-normal is the continuous distribution that results from applying a "squashing" function (the sigmoid, which is the inverse of the logit) to a normally distributed random variable. The squashing function maps every value the normal can take on into the range 0 to 1. If $X \sim \text{LogitNormal}(\mu, \sigma^2)$, it has:

\begin{align*}
\text{PDF:}&& &f_X(x) = \begin{cases} \frac{1}{\sigma\sqrt{2\pi}\,x(1 - x)}e^{-\frac{(\text{logit}(x) - \mu)^2}{2\sigma^2}} & \text{if } 0 < x < 1\\ 0 & \text{otherwise} \end{cases} \\
\text{CDF:} && &F_X(x) = \Phi\Big(\frac{\text{logit}(x) - \mu}{\sigma}\Big)\\
\text{Where:} && &\text{logit}(x) = \log\Big(\frac{x}{1-x}\Big)
\end{align*}
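The PDF and CDF above translate almost directly into code. The following is a minimal sketch using only Python's standard library; the function names (`logit`, `std_normal_cdf`, `logit_normal_pdf`, `logit_normal_cdf`) are illustrative choices, not part of the problem statement, and $\Phi$ is computed from `math.erf`.

```python
import math


def logit(x):
    """logit(x) = log(x / (1 - x)), defined for 0 < x < 1."""
    return math.log(x / (1.0 - x))


def std_normal_cdf(z):
    """Phi(z), the standard normal CDF, written in terms of the error function."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def logit_normal_pdf(x, mu, sigma):
    """Density of LogitNormal(mu, sigma^2) at x; zero outside (0, 1)."""
    if not 0.0 < x < 1.0:
        return 0.0
    coeff = 1.0 / (sigma * math.sqrt(2.0 * math.pi) * x * (1.0 - x))
    return coeff * math.exp(-((logit(x) - mu) ** 2) / (2.0 * sigma ** 2))


def logit_normal_cdf(x, mu, sigma):
    """P(X <= x) for X ~ LogitNormal(mu, sigma^2)."""
    if x <= 0.0:
        return 0.0
    if x >= 1.0:
        return 1.0
    return std_normal_cdf((logit(x) - mu) / sigma)
```

Note that `sigma` here is the standard deviation of the underlying normal, so $\sigma^2 = 0.9^2$ corresponds to `sigma=0.9`.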

A new theory shows that the logit-normal fits exam score distributions better than the traditionally used normal. Let's test it out! We have a set of exam scores for a test whose minimum possible score is 0 and maximum possible score is 1, and we are trying to decide between two hypotheses:

$H_1$: our grade scores are distributed according to $X\sim \text{Normal}(\mu = 0.7, \sigma^2 = 0.2^2)$.

$H_2$: our grade scores are distributed according to $X\sim \text{LogitNormal}(\mu = 1.0, \sigma^2 = 0.9^2)$.

Under the normal assumption, $H_1$, what is $P(0.9 < X < 1.0)$? Provide a numerical answer to two decimal places.

Under the logit-normal assumption, $H_2$, what is $P(0.9 < X < 1.0)$?
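Both interval questions reduce to a difference of CDF values, $P(a < X < b) = F_X(b) - F_X(a)$. The sketch below is one way to check a hand calculation, assuming only the standard library; the helper names (`normal_interval_prob`, `logit_normal_interval_prob`) are hypothetical and introduced here for illustration.

```python
import math


def std_normal_cdf(z):
    """Phi(z), the standard normal CDF."""
    return 0.5 * (1.0 + math.erf(z / math.sqrt(2.0)))


def normal_interval_prob(a, b, mu, sigma):
    """P(a < X < b) for X ~ Normal(mu, sigma^2)."""
    return std_normal_cdf((b - mu) / sigma) - std_normal_cdf((a - mu) / sigma)


def logit_normal_interval_prob(a, b, mu, sigma):
    """P(a < X < b) for X ~ LogitNormal(mu, sigma^2)."""

    def cdf(x):
        # The CDF is 0 at or below 0 and 1 at or above 1, since X lives in (0, 1).
        if x <= 0.0:
            return 0.0
        if x >= 1.0:
            return 1.0
        return std_normal_cdf((math.log(x / (1.0 - x)) - mu) / sigma)

    return cdf(b) - cdf(a)


# Parameters taken from H1 and H2 in the problem statement.
p_h1 = normal_interval_prob(0.9, 1.0, mu=0.7, sigma=0.2)
p_h2 = logit_normal_interval_prob(0.9, 1.0, mu=1.0, sigma=0.9)
print(f"H1: {p_h1:.2f}  H2: {p_h2:.2f}")
```

Because a continuous distribution puts zero probability on any single point, it makes no difference whether the endpoints 0.9 and 1.0 are included.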

Under the normal assumption, $H_1$, what is the maximum value that $X$ can take on?

Before observing any test scores, you assume that (a) one of your two hypotheses is correct and (b) initially, each hypothesis is equally likely to be correct, $P(H_1)=P(H_2)=\frac{1}{2}$. You then observe a single test score, $X = 0.9$. What is your updated probability that the logit-normal hypothesis is correct?
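Since $X$ is continuous, $P(X = 0.9)$ is zero under both hypotheses, so the usual approach is to apply Bayes' theorem with the densities standing in for the likelihoods: $P(H_2 \mid X = x) = \frac{f(x \mid H_2)P(H_2)}{f(x \mid H_1)P(H_1) + f(x \mid H_2)P(H_2)}$. The following is a minimal sketch of that update under the problem's parameters; the function name `posterior_h2` and its `prior_h2` argument are illustrative assumptions.

```python
import math


def normal_pdf(x, mu, sigma):
    """Density of Normal(mu, sigma^2) at x."""
    z = (x - mu) / sigma
    return math.exp(-0.5 * z * z) / (sigma * math.sqrt(2.0 * math.pi))


def logit_normal_pdf(x, mu, sigma):
    """Density of LogitNormal(mu, sigma^2) at x; zero outside (0, 1)."""
    if not 0.0 < x < 1.0:
        return 0.0
    logit_x = math.log(x / (1.0 - x))
    coeff = 1.0 / (sigma * math.sqrt(2.0 * math.pi) * x * (1.0 - x))
    return coeff * math.exp(-((logit_x - mu) ** 2) / (2.0 * sigma ** 2))


def posterior_h2(x, prior_h2=0.5):
    """P(H2 | X = x), using densities as likelihoods in Bayes' theorem."""
    like_h1 = normal_pdf(x, mu=0.7, sigma=0.2)        # H1: Normal(0.7, 0.2^2)
    like_h2 = logit_normal_pdf(x, mu=1.0, sigma=0.9)  # H2: LogitNormal(1.0, 0.9^2)
    numerator = like_h2 * prior_h2
    return numerator / (like_h1 * (1.0 - prior_h2) + numerator)


print(f"P(H2 | X = 0.9) = {posterior_h2(0.9):.3f}")
```

With equal priors the $\frac{1}{2}$ factors cancel, so the posterior depends only on the ratio of the two densities at the observed score.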